Vulnerability Management Automation Best Practices

As cyber threats evolve faster than human analysts can respond, managed service providers (MSPs), managed security service providers (MSSPs), and centralized enterprise security operations teams are turning to automation to keep pace with the scale and velocity of modern vulnerabilities. Manual scanning, triage, and patching are no longer sustainable when tens of thousands of CVEs are published each year and client infrastructures span hybrid, multi-cloud, and containerized environments.

Vulnerability management automation bridges this gap by combining continuous scanning, contextual risk scoring, and intelligent remediation workflows, allowing service providers to move from reactive patching to proactive risk reduction. When implemented correctly, automation improves security outcomes and gives teams back their time, often even their evenings and weekends.

This article explores the key pillars of automated vulnerability management, from risk-based prioritization and continuous asset discovery to AI-driven predictive defense. It highlights actionable best practices, common implementation challenges, and real-world examples to help technical professionals design and operate scalable, resilient, and compliance-ready vulnerability management pipelines.

Summary of key vulnerability management automation best practices


Prioritize vulnerabilities using risk-based frameworks

A risk-based framework helps MSPs and MSSPs avoid unproductive approaches (like “patch everything”) and instead concentrate on vulnerabilities that create the highest likelihood of compromise. To make this actionable, a prioritization engine must combine CVSS scores with concrete operational context—such as exploit availability, external exposure, business-critical workloads, and existing security controls—so that every remediation decision maps directly to risk reduction.

A practical implementation begins with data collection. The framework must ingest threat intelligence feeds (e.g., Exploit-DB and Metasploit modules), vulnerability scan results, cloud metadata, and CMDB- or EDR-backed asset inventories. For instance, when a platform observes that CVE-2023-23397 has public proof-of-concept exploits and is being actively exploited, it should automatically boost its priority, but only on assets where the vulnerable component is installed and reachable from untrusted networks. This prevents widespread “false urgency” and directs attention where it matters.
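A minimal Python sketch of that context check follows. It assumes the scan findings, an asset inventory, and a set of actively exploited CVE IDs have already been collected. The `Asset` and `Finding` classes, the 1 to 4 priority scale, and the asset names are hypothetical, chosen only to illustrate the logic.

```python
from dataclasses import dataclass

# Hypothetical, simplified records; a real platform would build these from
# scan results, CMDB/EDR inventories, and threat-intelligence feeds.
@dataclass
class Asset:
    name: str
    installed_components: set
    reachable_from_untrusted: bool

@dataclass
class Finding:
    cve_id: str
    component: str
    asset: str
    priority: int  # 1 (low) .. 4 (critical)

def escalate_exploited(findings, assets, exploited_cves):
    """Boost priority only where the CVE is known to be exploited, the
    vulnerable component is actually installed, and the asset is reachable
    from untrusted networks. Everything else keeps its original priority."""
    by_name = {a.name: a for a in assets}
    results = []
    for f in findings:
        asset = by_name.get(f.asset)
        priority = f.priority
        if (
            asset is not None
            and f.cve_id in exploited_cves
            and f.component in asset.installed_components
            and asset.reachable_from_untrusted
        ):
            priority = min(priority + 1, 4)  # one step up, capped at critical
        results.append((f.asset, f.cve_id, priority))
    return results

assets = [
    Asset("ws-sales-01", {"outlook"}, reachable_from_untrusted=True),
    Asset("ws-lab-02", {"outlook"}, reachable_from_untrusted=False),
]
findings = [
    Finding("CVE-2023-23397", "outlook", "ws-sales-01", 3),
    Finding("CVE-2023-23397", "outlook", "ws-lab-02", 3),
]
print(escalate_exploited(findings, assets, {"CVE-2023-23397"}))
# [('ws-sales-01', 'CVE-2023-23397', 4), ('ws-lab-02', 'CVE-2023-23397', 3)]
```

Both hosts run the vulnerable component, but only the one reachable from untrusted networks is escalated, which is the guard against "false urgency" described above.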

Next, the system assigns dynamic risk scores by weighing contextual factors. A simple but effective model might combine the following factors:

- Base severity: CVSS v3.1 score

- Exploitability: Known weaponization, public PoC, or exploitation in the wild

- Asset criticality: Data classification, business function, revenue impact

- Exposure: Internet-facing, internal, segmented, or protected by compensating controls

- Operational considerations: Patch complexity, downtime requirements, or availability of mitigations

For example, a CVSS 6.5 deserialization vulnerability on a public-facing authentication service with an available Metasploit module may be assigned a higher priority than a CVSS 9.1 local privilege escalation affecting non-persistent CI runners that rebuild every hour.
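The factors above can be combined into a multiplicative score. The sketch below is illustrative only: the multiplier values and category names are assumptions, not a standard, and a real program would calibrate them per tenant. It reproduces the comparison from the example, where the moderate-CVSS but weaponized, internet-facing flaw outranks the higher-CVSS flaw on ephemeral CI runners.

```python
from dataclasses import dataclass

# Hypothetical multipliers chosen for illustration; real programs calibrate
# these per tenant and revisit them as threat data changes.
EXPLOIT = {"none": 1.0, "poc": 1.3, "weaponized": 1.6, "in_the_wild": 2.0}
EXPOSURE = {"internet": 1.5, "internal": 1.0, "segmented": 0.7, "mitigated": 0.5}
CRITICALITY = {"low": 0.6, "medium": 1.0, "high": 1.4}

@dataclass
class VulnContext:
    cvss: float              # base severity, CVSS v3.1 (0.0-10.0)
    exploit: str             # exploitability key into EXPLOIT
    exposure: str            # exposure key into EXPOSURE
    criticality: str         # asset criticality key into CRITICALITY
    ephemeral: bool = False  # operational context: host is rebuilt frequently

def risk_score(v: VulnContext) -> float:
    """Scale the CVSS base score by contextual multipliers."""
    score = v.cvss * EXPLOIT[v.exploit] * EXPOSURE[v.exposure] * CRITICALITY[v.criticality]
    if v.ephemeral:
        score *= 0.5  # short-lived hosts limit how long a flaw stays exploitable
    return score

# The two findings from the example: a weaponized flaw on a public auth
# service versus a local privilege escalation on hourly-rebuilt CI runners.
auth_service = VulnContext(6.5, "weaponized", "internet", "high")
ci_runner = VulnContext(9.1, "none", "internal", "medium", ephemeral=True)
print(risk_score(auth_service) > risk_score(ci_runner))  # True
```

Multiplying rather than adding the factors lets strong mitigating context, such as segmentation or short-lived hosts, pull a high base score below a moderate one.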

Automate continuous data scanning

Continuous, automated scanning is essential for maintaining full visibility across an MSSP’s diverse client environments. Modern infrastructures—especially cloud-first or hybrid deployments—produce constant asset churn via short-lived containers, autoscaled instances, ephemeral development environments, and new SaaS integrations. Without continuous scanning and dynamic asset discovery, these transient resources become blind spots where unmonitored vulnerabilities accumulate. Automated discovery tooling (using, for example, cloud API polling, agent-based telemetry, and network probes) ensures that new or previously unknown assets are enrolled into scanning workflows the moment they appear, preventing “shadow IT” from slipping through coverage gaps.
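One building block of automated discovery is reconciling each discovery snapshot with the set of enrolled scan targets. The sketch below assumes discovery results (from cloud API polling, agents, or network probes) arrive as sets of asset IDs; the `AssetRegistry` class and its grace period before retiring an asset are hypothetical design choices.

```python
from collections import defaultdict

class AssetRegistry:
    """Keeps scan enrollment in sync with discovery polls (hypothetical design).

    New assets are enrolled on first sighting; assets are retired only after
    `retire_after_misses` consecutive polls without a sighting, so a brief
    API or agent outage does not silently drop coverage."""

    def __init__(self, retire_after_misses: int = 3):
        self.enrolled = set()
        self.misses = defaultdict(int)
        self.retire_after_misses = retire_after_misses

    def reconcile(self, discovered):
        """Merge one discovery snapshot; return (newly_enrolled, retired)."""
        discovered = set(discovered)
        new = sorted(discovered - self.enrolled)
        self.enrolled |= discovered
        for asset in discovered:
            self.misses.pop(asset, None)  # seen again: reset the miss counter
        retired = []
        for asset in sorted(self.enrolled - discovered):
            self.misses[asset] += 1
            if self.misses[asset] >= self.retire_after_misses:
                retired.append(asset)
        for asset in retired:
            self.enrolled.discard(asset)
            del self.misses[asset]
        return new, retired

registry = AssetRegistry(retire_after_misses=2)
print(registry.reconcile({"vm-1", "vm-2"}))   # (['vm-1', 'vm-2'], [])
print(registry.reconcile({"vm-1", "ctr-9"}))  # (['ctr-9'], [])
print(registry.reconcile({"vm-1", "ctr-9"}))  # ([], ['vm-2'])
```

New assets such as `ctr-9` are queued for scanning immediately, while a missing asset is kept enrolled until it has been absent long enough to be considered gone.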

To deliver this effectively at MSSP scale, several operational challenges must be addressed:

- Multi-tenancy across heterogeneous environments: MSSPs often manage dozens or hundreds of clients, each with unique networks, cloud providers, data classifications, and scanning policies. A shared scanning fabric must logically isolate each tenant’s data, apply tenant-specific rules, and support multiple scan engines without cross-contamination. For example, a healthcare client may require daily authenticated scans of PHI-processing workloads with strict segmentation controls, while a fintech client may only permit API-level cloud posture scans. The platform must orchestrate these differences automatically.

- Maintaining consistent SLAs for scan coverage: Service-level agreements typically mandate scan freshness (e.g., “no asset older than 72 hours since last scan”). Enforcing this across multi-tenant environments requires intelligent scheduling that respects client windows, rate limits, and network constraints. If a client’s cloud account spawns 40 new instances during an overnight deployment, the system must automatically queue and execute scans for all new assets before SLA deadlines without delaying other tenants whose SLAs are also ticking.

- Avoiding performance degradation from frequent scans: Repeated unauthenticated scans or aggressive port sweeps can saturate client networks or impact production systems. A robust design uses adaptive throttling, dynamic authentication, differential scanning (scanning only changed components), and prioritization queues based on asset criticality. Scans should automatically fall back to lightweight probes when network load is high.
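A freshness check against an SLA such as "no asset older than 72 hours since last scan" can be computed directly from last-scan timestamps. This sketch assumes those timestamps are available per asset; the `due_soon` early-warning band at 80% of the SLA is an illustrative choice, not a standard.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional

def sla_status(last_scans: Dict[str, Optional[datetime]], sla: timedelta,
               now: datetime, warn_fraction: float = 0.8) -> Dict[str, str]:
    """Classify assets against a scan-freshness SLA.

    'breached' : never scanned, or last scan older than the SLA
    'due_soon' : age has passed warn_fraction of the SLA; schedule it next
    'ok'       : otherwise
    """
    status = {}
    for asset, last in last_scans.items():
        if last is None or now - last > sla:
            status[asset] = "breached"
        elif now - last >= sla * warn_fraction:
            status[asset] = "due_soon"
        else:
            status[asset] = "ok"
    return status

now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
last_scans = {
    "web-01": now - timedelta(hours=10),
    "db-01": now - timedelta(hours=60),
    "old-vm": now - timedelta(hours=80),
    "new-ctr": None,  # discovered but not yet scanned
}
print(sla_status(last_scans, timedelta(hours=72), now))
# {'web-01': 'ok', 'db-01': 'due_soon', 'old-vm': 'breached', 'new-ctr': 'breached'}
```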

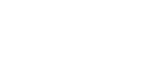

Consider an MSSP (see the figure above) managing two tenants: Client A, a healthcare provider with a 48-hour scan SLA; and Client B, a SaaS company with a 24-hour SLA. During a peak deployment window, Client B launches a burst of new container hosts. The platform detects the new assets via cloud API events and schedules immediate scans to maintain the 24-hour SLA. However, to prevent network congestion, it rate-limits scanning intensity and temporarily defers non-critical rescan jobs for Client A while still ensuring that Client A’s 48-hour SLA remains intact. Tenant isolation ensures that Client B’s high-volume cloud activity does not impact Client A’s PHI-restricted environment.
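The scheduling decision in this scenario resembles earliest-deadline-first (EDF) scheduling. The sketch below is a simplification under stated assumptions: each job carries the hours left before its asset breaches the tenant SLA, a single `capacity` number stands in for rate limiting, and tenant data isolation is handled elsewhere. Job, tenant, and asset names are hypothetical.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class ScanJob:
    deadline_h: float                  # hours left before the asset breaches its tenant's SLA
    tenant: str = field(compare=False)
    asset: str = field(compare=False)

def plan_cycle(jobs, capacity, cycle_h):
    """Earliest-deadline-first selection for one scheduling cycle.

    `capacity` stands in for the global rate limit on scan intensity. Returns
    (scheduled, deferred, at_risk); at_risk holds deferred jobs whose deadline
    would pass before the next cycle starts, which should trigger an alert or
    a temporary capacity increase."""
    heap = list(jobs)
    heapq.heapify(heap)
    scheduled = [heapq.heappop(heap) for _ in range(min(capacity, len(heap)))]
    deferred = sorted(heap)
    at_risk = [j for j in deferred if j.deadline_h <= cycle_h]
    return scheduled, deferred, at_risk

# Client B's burst of new hosts (24h SLA) and Client A's routine rescans
# that still have time left on a 48h SLA.
jobs = [ScanJob(24.0, "client-b", f"ctr-{i}") for i in range(3)]
jobs += [ScanJob(30.0, "client-a", "ehr-db"), ScanJob(40.0, "client-a", "ehr-web")]
scheduled, deferred, at_risk = plan_cycle(jobs, capacity=3, cycle_h=6.0)
print([j.tenant for j in scheduled])  # ['client-b', 'client-b', 'client-b']
print([j.asset for j in deferred])    # ['ehr-db', 'ehr-web']
print(at_risk)                        # []
```

Client B's new hosts run first because their deadlines are nearest, while Client A's rescans are deferred without being at risk, mirroring the scenario above.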

Cyrisma provides automated data scanning with multi-tenancy support, delivering continuous scanning across dynamic environments so that no asset becomes a blind spot. Its intuitive dashboard visualizes scan trends, highlights emerging risks, and prioritizes critical targets for faster, safer remediation.

Integrate threat intelligence for real-time risk context

Real-time threat intelligence enrichment enables MSSPs to correlate raw vulnerability scan results with active exploitation trends, helping analysts focus on issues that adversaries are weaponizing right now. By automatically pulling data from sources such as CISA KEV, MITRE ATT&CK, and Exploit-DB, platforms can elevate the risk score of vulnerabilities that pose immediate danger and suppress noise from those that are theoretical or outdated. Key components for threat intelligence integration are summarized in the table below.
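A minimal KEV enrichment pass might look like the sketch below. CISA publishes the KEV catalog as a JSON feed; the sample here is trimmed to the single field the code uses, and field names should be checked against the live feed. The 1.5 boost factor and the `CVE-0000-0001` placeholder are hypothetical.

```python
import json

# Trimmed sample shaped like CISA's KEV JSON feed: a top-level
# "vulnerabilities" list whose entries carry a "cveID" field. Verify field
# names against the live catalog before relying on them.
KEV_FEED = json.loads('{"vulnerabilities": [{"cveID": "CVE-2023-23397"}]}')

def enrich_with_kev(findings, kev_feed, kev_multiplier=1.5):
    """Flag findings listed in KEV, boost their score, and rank the results."""
    kev_ids = {v["cveID"] for v in kev_feed.get("vulnerabilities", [])}
    enriched = []
    for f in findings:
        in_kev = f["cve"] in kev_ids
        score = f["score"] * (kev_multiplier if in_kev else 1.0)
        enriched.append({**f, "in_kev": in_kev, "score": score})
    return sorted(enriched, key=lambda f: f["score"], reverse=True)

findings = [
    {"cve": "CVE-0000-0001", "score": 8.0},  # hypothetical placeholder ID
    {"cve": "CVE-2023-23397", "score": 6.0},
]
for f in enrich_with_kev(findings, KEV_FEED):
    print(f["cve"], f["in_kev"], f["score"])
# CVE-2023-23397 True 9.0
# CVE-0000-0001 False 8.0
```

The KEV-listed finding moves ahead of a higher-scored one with no evidence of exploitation, which is the re-ranking the paragraph above describes.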


Automate patch and remediation workflows

Automating patch and remediation workflows is critical for scaling modern vulnerability management programs, especially within MSSP platforms that support dozens or hundreds of tenant environments. Timely patching directly reduces exploit windows, but manual coordination across diverse systems, teams, and change-control processes often leads to delays. Automation brings consistency, reduces operational overhead, and frees analysts from repetitive triage and ticket-creation tasks.

Despite its importance, patch automation remains challenging. Environments vary widely in terms of operating systems, package managers, deployment pipelines, maintenance windows, and business constraints. Not all vulnerabilities can be remediated with a simple patch because some require dependency checks, configuration changes, or compensating controls. Remediation carries operational risk, making context and accuracy essential for safe automation.

AI-driven remediation helps bridge these gaps by generating personalized recommendations based on asset configuration, software versions, historical patch behavior, and tenant-specific constraints. Rather than generic “apply patch X” instructions, AI can propose tailored sequences, identify prerequisites, assess regression risk, and adapt rollout order and timing to each asset’s criticality and exposure. This contextual reasoning makes automated workflows safer and more precise.

Key requirements for effective automated patch and remediation workflows include:

- Accurate asset–vulnerability mapping to determine exactly which systems are affected and what patches or controls apply

- Dependency and impact analysis to avoid breaking services during updates

- Support for multi-step remediation, including configuration changes, credential rotation, or compensating controls

- Integration with ITSM, CI/CD, and orchestration tools for seamless ticketing, approvals, and automated execution

- Rollback and validation mechanisms to verify successful remediation and ensure business continuity
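The multi-step and rollback requirements can be sketched as an ordered list of steps, each with an apply action, a post-change check, and a rollback. This is a minimal illustration, not a production orchestrator; the `Step` structure and the simulated state are assumptions, and real steps would call ITSM, CI/CD, or configuration-management tooling.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Step:
    name: str
    apply: Callable[[], None]     # e.g. change a config, install a package
    verify: Callable[[], bool]    # post-change check, e.g. version or health probe
    rollback: Callable[[], None]  # restores the pre-change state

def run_remediation(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order; on the first failed check or exception, roll back
    the failing step and every step already applied, newest first."""
    log: List[str] = []
    applied: List[Step] = []
    for step in steps:
        try:
            step.apply()
            ok = step.verify()
        except Exception as exc:  # treat unexpected errors as failed checks
            ok = False
            log.append(f"{step.name}: error {exc!r}")
        if not ok:
            log.append(f"{step.name}: failed, rolling back")
            for prev in reversed(applied + [step]):
                prev.rollback()
                log.append(f"{prev.name}: rolled back")
            return False, log
        applied.append(step)
        log.append(f"{step.name}: ok")
    return True, log

# Simulated target state; the second step's health check fails on purpose.
state = {"tls_config": "legacy", "pkg_version": "1.0"}
steps = [
    Step("harden-tls",
         apply=lambda: state.update(tls_config="modern"),
         verify=lambda: state["tls_config"] == "modern",
         rollback=lambda: state.update(tls_config="legacy")),
    Step("upgrade-pkg",
         apply=lambda: state.update(pkg_version="1.1"),
         verify=lambda: False,  # simulated failed post-patch health check
         rollback=lambda: state.update(pkg_version="1.0")),
]
ok, log = run_remediation(steps)
print(ok, state)  # False {'tls_config': 'legacy', 'pkg_version': '1.0'}
```

Returning the step log alongside the result gives the ITSM ticket a record of exactly what was applied and reverted.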

Implement continuous monitoring and verification

Continuous monitoring and verification ensure that vulnerability management does not stop once a patch is issued or a remediation action is executed. In an MSSP context, where environments are diverse, constantly changing, and often managed at scale, ongoing validation is essential to confirm that vulnerabilities are truly resolved, new issues are promptly detected, and client environments remain compliant with security baselines. This involves continuously collecting telemetry from scanners, agents, configuration stores, and orchestration systems, then correlating that data to maintain an accurate, up-to-date view of risk.

This matters because patching and remediation workflows can fail silently or drift over time. Systems may miss updates, revert configurations, or fall out of compliance due to deployment errors, user changes, or emerging threats. At MSSP scale, even small visibility gaps can lead to prolonged exposure windows or inaccurate reporting. Yet achieving continuous verification is challenging: Environments are fluid, systems frequently reboot or scale up/down, and data sources may update at different cadences. Ensuring correctness across tenants requires robust correlation logic, noise reduction, and automation that adapts to real-world operational variance.
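A core verification check compares reported fixes with the newest scan data. The sketch assumes fix timestamps come from the ticketing or orchestration system and scan completion times from the scanner; the function name and the placeholder CVE IDs are hypothetical. A fix counts as verified only once a scan completed after it no longer reports the finding.

```python
from datetime import datetime, timedelta

def verify_remediations(fixed_at, last_scan_at, open_findings):
    """Classify each reported fix against the newest scan data.

    fixed_at      {(asset, cve): time the fix was reported as done}
    last_scan_at  {asset: completion time of the most recent scan}
    open_findings {(asset, cve)} still reported by those scans
    """
    results = {}
    for (asset, cve), fixed in fixed_at.items():
        scanned = last_scan_at.get(asset)
        if scanned is None or scanned <= fixed:
            results[(asset, cve)] = "unverified"  # no scan since the fix yet
        elif (asset, cve) in open_findings:
            results[(asset, cve)] = "reopened"    # fix failed silently or drifted
        else:
            results[(asset, cve)] = "verified"
    return results

t0 = datetime(2024, 3, 1, 9, 0)
fixed_at = {
    ("web-01", "CVE-0000-0001"): t0,
    ("db-01", "CVE-0000-0002"): t0,
    ("app-02", "CVE-0000-0003"): t0,
}
last_scan_at = {"web-01": t0 + timedelta(hours=6), "db-01": t0 + timedelta(hours=6),
                "app-02": t0 - timedelta(hours=1)}
open_findings = {("db-01", "CVE-0000-0002")}
for key, outcome in verify_remediations(fixed_at, last_scan_at, open_findings).items():
    print(key, outcome)
# ('web-01', 'CVE-0000-0001') verified
# ('db-01', 'CVE-0000-0002') reopened
# ('app-02', 'CVE-0000-0003') unverified
```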

The following table summarizes key actionable requirements for MSSP software in continuous monitoring and verification.

Generate actionable vulnerability reports and dashboards

In a modern MSSP environment, raw vulnerability data is only as useful as the insights it drives. Automated reports and dashboards transform scattered scan results, patch statuses, and remediation actions into clear, actionable intelligence. The goal is not just to show “what’s broken” but to highlight where to act next, prioritize high-risk assets, and measure the effectiveness of your vulnerability management program in real time.

Actionable vulnerability reports should include the following data:

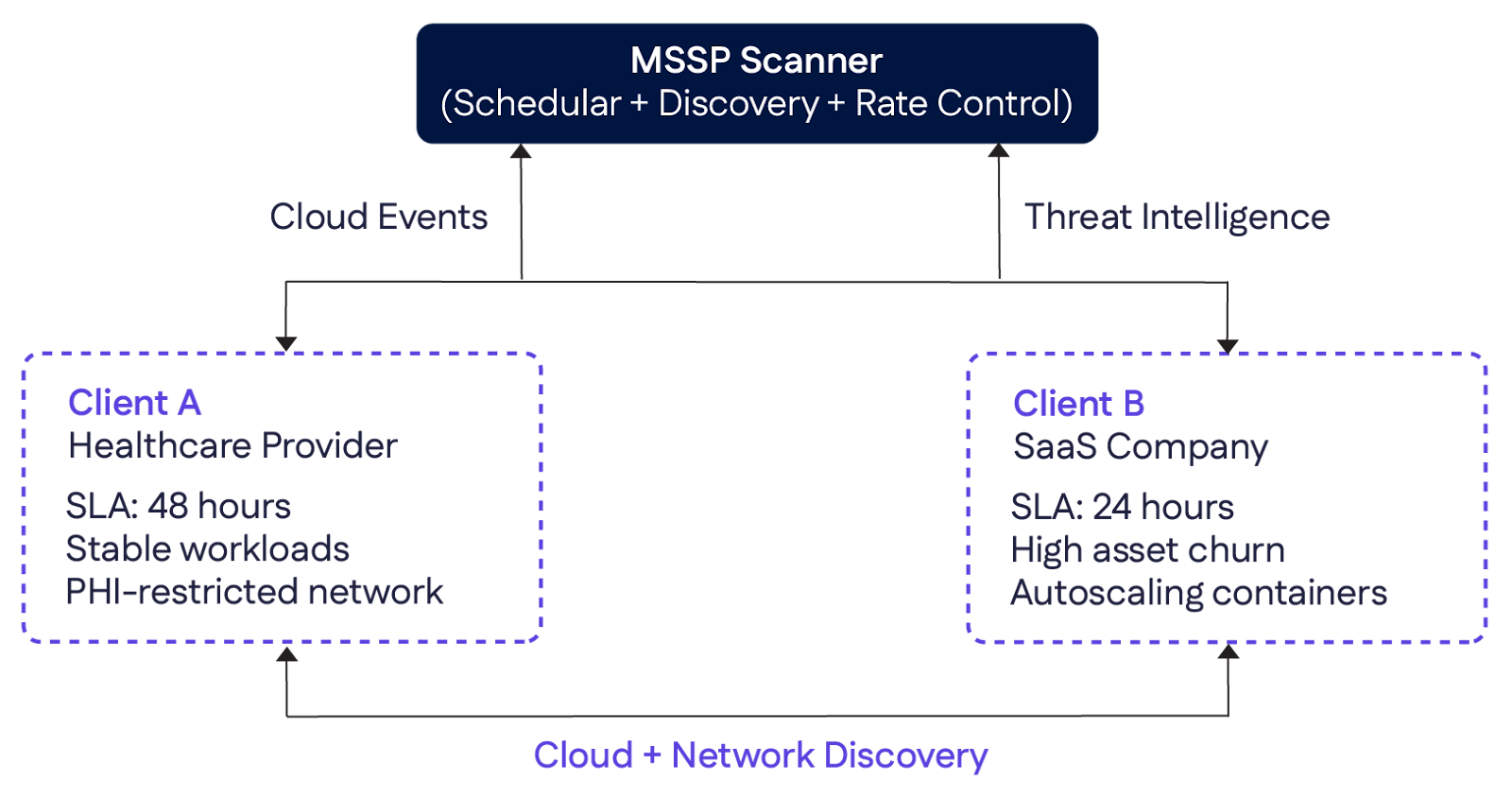

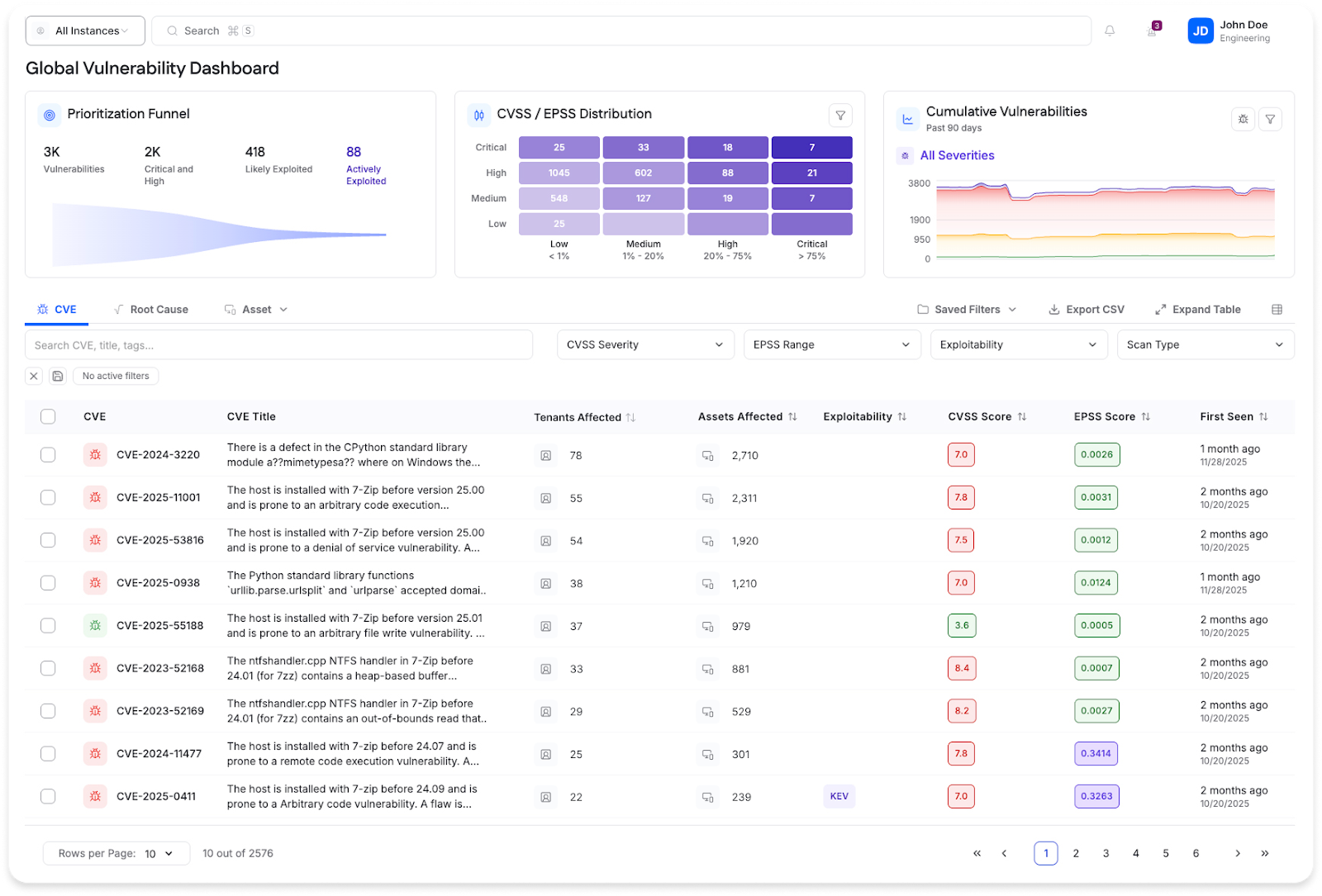

Actionable dashboards go beyond static numbers: They use automation and AI to filter noise, highlight critical paths for remediation, and suggest next steps. Analysts can quickly see which vulnerabilities are actively exploitable, which patches failed to deploy, and which assets need urgent attention. Summary dashboards let executives track overall risk reduction and compliance posture, giving confidence that security efforts are producing measurable results.
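Many of these dashboard figures reduce to simple aggregates over remediation records. The sketch below computes open and overdue counts and mean time to remediate (MTTR) per severity; the record layout and the SLA values are assumptions for illustration.

```python
from datetime import datetime
from statistics import mean

def program_metrics(records, now, sla_days):
    """Per-severity open count, overdue count, and mean time to remediate (days).

    records: dicts with 'severity', 'opened' (datetime), 'closed' (datetime or None)
    sla_days: {severity: allowed days to remediate}
    """
    metrics = {}
    for sev in sorted({r["severity"] for r in records}):
        rows = [r for r in records if r["severity"] == sev]
        open_rows = [r for r in rows if r["closed"] is None]
        fix_days = [(r["closed"] - r["opened"]).days for r in rows if r["closed"] is not None]
        metrics[sev] = {
            "open": len(open_rows),
            "overdue": sum(1 for r in open_rows if (now - r["opened"]).days > sla_days[sev]),
            "mttr_days": mean(fix_days) if fix_days else None,
        }
    return metrics

records = [
    {"severity": "critical", "opened": datetime(2024, 1, 1), "closed": datetime(2024, 1, 4)},
    {"severity": "critical", "opened": datetime(2024, 1, 1), "closed": datetime(2024, 1, 6)},
    {"severity": "critical", "opened": datetime(2024, 1, 2), "closed": None},
    {"severity": "high", "opened": datetime(2024, 1, 10), "closed": None},
]
print(program_metrics(records, now=datetime(2024, 1, 20), sla_days={"critical": 7, "high": 30}))
```

Tracking overdue counts next to MTTR shows both how fast fixes land and where the program is currently falling behind.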

Cyrisma’s vulnerability dashboard turns raw scan data into clear, actionable insights by highlighting high-risk assets, trending issues, and remediation progress. Interactive profiles and detailed overviews help analysts quickly understand where to focus their efforts and what actions will have the highest impact. The interface delivers real-time visibility into program effectiveness, guiding MSSPs on what to fix next and how their security posture is evolving.

Align automation with compliance and governance requirements

In the MSSP world, vulnerability management isn’t just about closing security gaps; it’s about proving that you’re doing it the right way. Automation can take this from a headache to a strategic advantage by mapping technical findings directly to regulatory and governance frameworks like PCI-DSS, HIPAA, or NIST 800-53. Instead of manually translating scan results into compliance language, automated workflows can categorize vulnerabilities, track remediation, and produce audit-ready reports that speak the regulator’s language.

The tricky part is that compliance is never one-size-fits-all. A single vulnerability may impact different controls depending on the client, environment, or regulatory regime. Multi-tenant MSSPs need automation that can handle these variations seamlessly, ensuring that every scan, patch, or configuration change aligns with the right governance requirements. At the same time, every automated action, whether a patch deployment or a remediation ticket, needs a verifiable audit trail, so you can prove exactly what was done, when, and why.
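One common way to make an automation audit trail tamper-evident is hash chaining, where each entry's hash also covers the previous entry's hash. The sketch below is illustrative; the entry fields, `patch-123`, and `SEC-42` are hypothetical. Chaining detects edits, reordering, or deletion of any entry except the newest; catching truncation of the tail requires storing the latest hash somewhere the automation cannot rewrite.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash, action):
    body = json.dumps(action, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

def append_entry(log, action):
    """Append an action record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"action": action, "prev": prev_hash, "hash": _digest(prev_hash, action)})

def verify_chain(log):
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["action"]):
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"when": "2024-05-02T01:00:00Z", "who": "automation",
                         "what": "deploy patch-123 to web-01", "why": "ticket SEC-42"})
append_entry(audit_log, {"when": "2024-05-02T01:20:00Z", "who": "automation",
                         "what": "rescan web-01", "why": "verify patch-123"})
print(verify_chain(audit_log))  # True
audit_log[0]["action"]["why"] = "edited after the fact"
print(verify_chain(audit_log))  # False
```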

When executed well, compliance-aligned automation makes audits far less laborious. For instance, a scan could automatically map vulnerabilities to the relevant NIST control families and generate a quarterly report ready for client review. This approach turns raw security data into actionable governance insights, letting MSSPs demonstrate measurable compliance while keeping remediation workflows running smoothly.
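The NIST mapping in that example can be sketched as a lookup from finding category to NIST SP 800-53 controls, with per-tenant overrides for client-specific variations. The control IDs are real SP 800-53 controls, but the category names and the mapping itself are illustrative assumptions, not an authoritative crosswalk.

```python
from collections import Counter

# Illustrative, non-authoritative mapping from finding categories to
# NIST SP 800-53 controls; real mappings vary by client and regime.
DEFAULT_CONTROL_MAP = {
    "missing_patch": ["SI-2"],       # Flaw Remediation
    "misconfiguration": ["CM-6"],    # Configuration Settings
    "weak_credentials": ["IA-5"],    # Authenticator Management
    "cleartext_protocol": ["SC-8"],  # Transmission Confidentiality and Integrity
}

def control_report(findings, overrides=None):
    """Count findings per NIST control family and list unmapped findings.

    `overrides` lets a tenant replace the default controls for a category."""
    mapping = {**DEFAULT_CONTROL_MAP, **(overrides or {})}
    by_family = Counter()
    unmapped = []
    for f in findings:
        controls = mapping.get(f["category"])
        if not controls:
            unmapped.append(f["id"])  # needs analyst review before reporting
            continue
        for control in controls:
            by_family[control.split("-")[0]] += 1  # "SI-2" -> family "SI"
    return dict(by_family), unmapped

findings = [
    {"id": "F1", "category": "missing_patch"},
    {"id": "F2", "category": "missing_patch"},
    {"id": "F3", "category": "weak_credentials"},
    {"id": "F4", "category": "exposed_debug_endpoint"},  # no mapping yet
]
print(control_report(findings))  # ({'SI': 2, 'IA': 1}, ['F4'])
# A tenant whose auditor also expects RA-5 (Vulnerability Monitoring and Scanning):
print(control_report(findings, {"missing_patch": ["SI-2", "RA-5"]}))
# ({'SI': 2, 'RA': 2, 'IA': 1}, ['F4'])
```

Surfacing unmapped findings explicitly, rather than dropping them, keeps the quarterly report honest about coverage gaps.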

Cyrisma’s compliance dashboard maps scan results directly to leading frameworks like NIST, HIPAA, and CIS Controls, giving MSSPs a clear view of where they stand against regulatory requirements. The interface highlights pass/fail checks, control gaps, and remediation progress so teams can quickly understand compliance risk across tenants.

Enable AI and feedback loops for predictive defense

Automation doesn’t have to be reactive, and when paired with AI and continuous feedback loops, vulnerability management can become predictive. Instead of waiting for a vulnerability to be exploited or a patch to fail, AI can analyze historical data, exploit trends, and asset criticality to anticipate which systems are most at risk and suggest remediation before issues escalate. This shifts MSSPs from firefighting to proactive defense, reducing exposure windows and improving overall security posture.

Implementing predictive defense comes with its challenges. Models need high-quality input from patch histories, scan results, configuration changes, and even threat intelligence feeds. Feedback loops must continuously refine predictions based on the success or failure of previous remediations, newly discovered vulnerabilities, and evolving attack patterns. Multi-tenant environments add complexity: AI must learn across diverse client infrastructures while respecting each tenant’s operational constraints and priorities.

When done right, AI-driven feedback loops can do more than flag risks; they can prioritize them, suggest tailored remediation sequences, and highlight patterns that might otherwise go unnoticed. For example, if certain workloads consistently fail patch deployments or show recurring misconfigurations, AI can recommend scheduling changes, dependency checks, or preemptive fixes to prevent escalation.
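A full predictive model is beyond a short example, but the feedback half of the loop can be sketched with a deliberately simple statistic: an exponentially weighted moving average of patch failures per workload. This is a stand-in for a learned model, not an AI technique; the class name, `alpha`, and the review threshold are hypothetical.

```python
from collections import defaultdict

class PatchFeedback:
    """Per-workload patch failure rate, updated after every deployment.

    An exponentially weighted moving average lets recent outcomes outweigh
    old ones, so the signal adapts as workloads or patch processes change."""

    def __init__(self, alpha: float = 0.3, threshold: float = 0.5):
        self.alpha = alpha          # weight given to the newest outcome
        self.threshold = threshold  # failure rate that triggers a review
        self.failure_rate = defaultdict(float)

    def record(self, workload: str, succeeded: bool) -> None:
        outcome = 0.0 if succeeded else 1.0
        prev = self.failure_rate[workload]
        self.failure_rate[workload] = self.alpha * outcome + (1 - self.alpha) * prev

    def needs_review(self):
        """Workloads whose recent failure rate suggests changing the
        schedule, adding dependency checks, or applying a preemptive fix."""
        return sorted(w for w, rate in self.failure_rate.items() if rate >= self.threshold)

fb = PatchFeedback(alpha=0.5, threshold=0.5)
for ok in (False, False):
    fb.record("legacy-erp", ok)
for ok in (False, True):
    fb.record("web-tier", ok)
print(fb.needs_review())  # ['legacy-erp']
```

A workload that fails repeatedly stays flagged, while one that recovers after a single failure drops back below the threshold.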


Conclusion

Effective vulnerability management no longer depends solely on manual processes or periodic scans. It requires continuous, intelligent automation that can adapt to the dynamic nature of modern infrastructures. For MSSPs, automation is about delivering consistent, measurable risk reduction across diverse client environments while maintaining compliance and visibility at scale.

In the end, the goal of vulnerability management automation is not to replace human expertise but to augment it, allowing analysts to focus on strategic risk decisions while automation handles the repetitive, time-sensitive tasks. When properly designed and governed, an automated vulnerability management pipeline becomes a cornerstone of proactive cybersecurity defense for both MSSPs and their clients.